
CoreOS & Docker Container Virtualization

CoreOS is an open source, lightweight operating system based on the Linux kernel. It is designed to provide infrastructure for clustered deployments, with a focus on automation, ease of application deployment, security, reliability and scalability.

CoreOS is a powerful Linux distribution built to make large, scalable deployments on varied infrastructure simple to manage. Based on a build of Chrome OS, CoreOS maintains a lightweight host system and uses Docker containers for all applications. This system provides process isolation and also allows applications to be moved throughout a cluster easily.

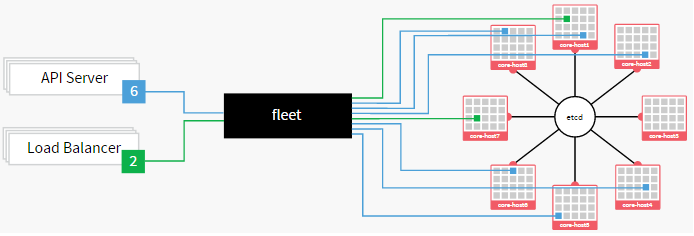

To manage these clusters, CoreOS uses a globally distributed key-value store called etcd to pass configuration data between nodes. This component is also the platform for service discovery, allowing applications to be dynamically configured based on the information available through the shared resource. Offering Linux as a service, CoreOS has become the preferred distro for Docker and may go a long way toward making data centers more cloudlike.

To schedule and manage applications across the entire cluster, CoreOS uses a tool called fleet. Fleet serves as a cluster-wide init system: it ties into each individual node’s systemd init system and uses it to manage processes across the cluster. This makes it easy to configure highly available applications and manage the cluster from a single point.

CoreOS is a fork of Chrome OS: it uses the software development kit (SDK) freely available through Chromium OS as a base, adds new functionality, and customizes the result to support the hardware used in servers.

As of July 2014, CoreOS is actively developed, primarily by Alex Polvi, Brandon Philips and Michael Marineau, with its major features (other than etcd and fleet) available as a stable release.

Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications. Consisting of Docker Engine, a portable, lightweight runtime and packaging tool, and Docker Hub, a cloud service for sharing applications and automating workflows, Docker enables apps to be quickly assembled from components and eliminates the friction between development, QA, and production environments. As a result, IT can ship faster and run the same app, unchanged, on laptops, data center VMs, and any cloud. Docker makes virtualization light, easy, and portable.

Docker is an open source framework that provides a lighter-weight type of virtualization, using Linux containers rather than virtual machines. Built on traditional Linux distributions such as Red Hat Enterprise Linux and Ubuntu, Docker lets you package applications and services as images that run in their own portable containers and can move between physical, virtual, and cloud foundations without requiring any modification. If you build a Docker image on an Ubuntu laptop or physical server, you can run it on any compatible Linux, anywhere.

In this way, Docker allows for a very high degree of application portability and agility, and it lends itself to highly scalable applications. However, the nature of Docker also leans toward running a single service or application per container, rather than a collection of processes such as a LAMP stack. Running several processes in one container is possible, but here we describe the most common use: a single process or service per container.

CoreOS allows you to set up your infrastructure in Docker containers, which may mean that you need to rethink the way your applications are built out. Basically, you would want to set up each “service”, like an app server or a database server, in its own Docker container. The host system can forward traffic to these containers if desired.

Docker has its own recommendations for how to handle things like databases inside containers and connecting applications to storage. What CoreOS allows you to do is manage your Docker-ized infrastructure in a reliable, scalable manner. Once you have containers configured to communicate with each other, you can use fleet to tell CoreOS to deploy a certain number of them in a specific configuration across your cluster. The cluster can detect machine failures and automatically move the affected services to other machines. If you have configured your containers to monitor etcd values and update their own configurations when a change is detected, you can get automatic service discovery.
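The watch-and-reconfigure pattern described above can be illustrated with a small in-memory model. This is only a sketch: `ToyStore` is a hypothetical stand-in for a shared key-value store and does not use the etcd API, and the key names and addresses are made up for the example.

```python
class ToyStore:
    """In-memory stand-in for a shared key-value store (NOT the etcd API)."""

    def __init__(self):
        self._data = {}
        self._watchers = {}  # key prefix -> list of callbacks

    def watch(self, prefix, callback):
        """Call `callback` with all entries under `prefix` whenever one changes."""
        self._watchers.setdefault(prefix, []).append(callback)

    def list(self, prefix):
        """Return a dict of every key/value pair stored under `prefix`."""
        return {k: v for k, v in self._data.items() if k.startswith(prefix)}

    def set(self, key, value):
        self._data[key] = value
        self._notify(key)

    def delete(self, key):
        self._data.pop(key, None)
        self._notify(key)

    def _notify(self, key):
        for prefix, callbacks in self._watchers.items():
            if key.startswith(prefix):
                for callback in callbacks:
                    callback(self.list(prefix))


class LoadBalancerConfig:
    """Hypothetical consumer that rebuilds its backend list on every change."""

    def __init__(self):
        self.backends = []

    def update(self, entries):
        self.backends = sorted(entries.values())


store = ToyStore()
lb = LoadBalancerConfig()
store.watch("/services/web/", lb.update)

# Each web container announces itself when it starts...
store.set("/services/web/web-1", "10.0.0.1:8080")
store.set("/services/web/web-2", "10.0.0.2:8080")
# ...and its entry disappears when it stops or its machine fails.
store.delete("/services/web/web-1")

print(lb.backends)
```

In a real cluster the store would be etcd and the announcements would typically come from the containers themselves or from a helper process next to them; the point here is only that the consumer never hard-codes where its backends live.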

Basically, CoreOS is an incredibly flexible platform that can be used to build very scalable solutions. You may have to rearchitect some of your application designs, but the benefits are usually worth the effort.

fleet is a cluster manager that controls systemd at the cluster level. To run your services in the cluster, you must submit regular systemd units combined with a few fleet-specific properties.
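As a rough illustration of what such a unit might look like, the sketch below renders one as text. The unit name `web-1`, the image `example/web:latest` and the helper `render_fleet_unit` are hypothetical; the `[X-Fleet]` section with a `Conflicts=` option is how fleet expresses machine-level anti-affinity, but option names have varied between fleet releases, so check the documentation for the version you run.

```python
def render_fleet_unit(name, image, conflicts=None):
    """Render a systemd unit, with an optional [X-Fleet] section, as text.

    `name` is used both as the description and as the Docker container name;
    `conflicts` is an optional glob of unit names that must not share a machine.
    """
    lines = [
        "[Unit]",
        f"Description={name}",
        "After=docker.service",
        "Requires=docker.service",
        "",
        "[Service]",
        # The leading '-' tells systemd to ignore a failure of this command,
        # e.g. when no stale container with this name exists.
        f"ExecStartPre=-/usr/bin/docker rm -f {name}",
        f"ExecStart=/usr/bin/docker run --name {name} {image}",
        f"ExecStop=/usr/bin/docker stop {name}",
    ]
    if conflicts:
        # Fleet-specific properties live in their own section.
        lines += ["", "[X-Fleet]", f"Conflicts={conflicts}"]
    return "\n".join(lines) + "\n"


unit_text = render_fleet_unit(
    "web-1", "example/web:latest", conflicts="web-*.service"
)
print(unit_text)
```

Everything outside `[X-Fleet]` is an ordinary systemd unit, which is why an existing unit usually needs only a few extra lines before it can be submitted to the cluster.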

Features:

- Deploy Docker containers on arbitrary hosts in a cluster
- Distribute services across a cluster using machine-level anti-affinity
- Maintain N instances of a service, re-scheduling on machine failure
- Discover machines running in the cluster
- Automatically SSH into the machine running a job

With fleet, you can treat your CoreOS cluster as if it shared a single init system. It encourages users to write applications as small, ephemeral units that can easily migrate around a cluster of self-updating CoreOS machines.

By using fleet, a DevOps team can focus on running the containers that make up a service, without having to worry about the individual machines each container is running on. If your application consists of five containers, fleet will guarantee that they stay running somewhere on the cluster. If a machine fails or needs to be updated, containers running on that machine will be moved to other qualified machines in the cluster.
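The rescheduling behavior can be pictured with a toy model. This is not fleet's actual scheduling algorithm; it is a minimal sketch that assumes a simple "least loaded machine wins" rule, with hypothetical unit names `web-1` to `web-5` and machine names `m1` to `m3`.

```python
def least_loaded(load):
    """Pick the machine with the fewest units, breaking ties by name."""
    return min(load, key=lambda machine: (load[machine], machine))


def schedule(units, machines):
    """Place each unit on the machine that currently holds the fewest units."""
    load = {machine: 0 for machine in machines}
    placement = {}
    for unit in units:
        target = least_loaded(load)
        placement[unit] = target
        load[target] += 1
    return placement


def reschedule(placement, failed, machines):
    """Move every unit off the failed machine onto the remaining ones."""
    healthy = [machine for machine in machines if machine != failed]
    if not healthy:
        raise RuntimeError("no healthy machines left in the cluster")
    load = {machine: 0 for machine in healthy}
    for machine in placement.values():
        if machine != failed:
            load[machine] += 1
    new_placement = dict(placement)
    for unit in sorted(placement):
        if placement[unit] == failed:
            target = least_loaded(load)
            new_placement[unit] = target
            load[target] += 1
    return new_placement


machines = ["m1", "m2", "m3"]
units = [f"web-{i}" for i in range(1, 6)]

before = schedule(units, machines)
after = reschedule(before, failed="m1", machines=machines)

print(before)
print(after)
```

All five units are still placed after `m1` fails; only the ones that were running on it move, which is the property the paragraph above describes.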